Decoding InpharmD - Revolutionizing Drug Information with AI

Hospitals struggle to access reliable drug information. Paywalls and the limited time clinicians have to sift through the literature manually are significant obstacles. On top of this, the healthcare industry is feeling the pinch of workforce shortages, supply chain hiccups, and a 5% national increase in drug spending.

Research indicates that embracing evidence-based practices, those grounded in meticulous scientific research and data analysis, delivers tangible benefits. These include a 30% reduction in medication errors, a 20-30% decrease in adverse events, and the elimination of 20% of unnecessary medical procedures. These improvements come while also cutting healthcare costs by 18%, all without any compromise on patient satisfaction.

InpharmD plays a crucial role in enabling evidence-based care by providing easy access to on-demand drug information. This digital service stitches together tailored, data-backed answers to both clinical inquiries and formulary requests, thanks to a powerful combo of artificial intelligence (AI) and pharmacy intelligence.

In addition, InpharmD acts as a trusted ally in promoting cost-effective decisions. By recommending therapeutic interchanges and protocols rooted in data, the platform facilitates a reduction in patient hospital stays - a factor that accounts for a hefty annual cost of $377.5 billion.

How it works

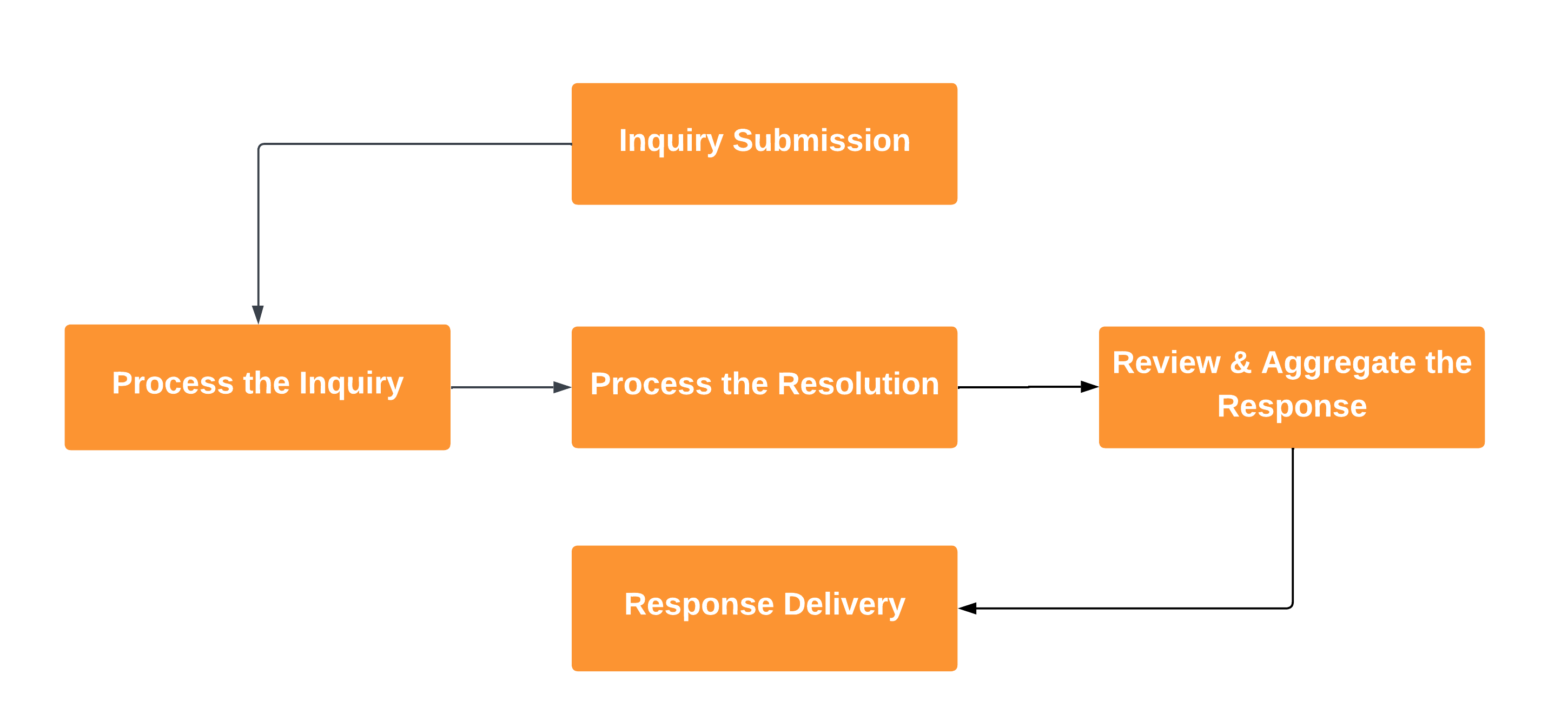

At a high level, InpharmD’s AI Assistant responds to an inquiry in five steps:

- Submission of Inquiry

- Processing of Inquiry

- Resolution Process

- Aggregation of Response

- Delivery of the Final Response

Inquiry Submission

When a clinician submits an inquiry to InpharmD, it is immediately passed to InpharmD’s large language model (LLM). The purpose of this LLM call is to determine which types of data need to be gathered to answer the inquiry.

Responding to an inquiry requires both advanced reasoning capabilities (for example, to differentiate between a broad, general-medicine question and an advanced evidence-based literature question) and a deep understanding of the context of the inquiry.
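As a rough illustration of this routing step, the sketch below decides which data sources an inquiry needs. The source names, the `route_inquiry` function, and the keyword rules are all hypothetical. In the system described here an LLM makes this decision, and the keyword stub only stands in for that call.

```python
from dataclasses import dataclass

# Hypothetical source categories; the article does not list the real ones.
GENERAL_SOURCES = ["tertiary_references"]
EVIDENCE_SOURCES = ["clinical_guidelines", "randomized_controlled_trials", "case_reports"]

# Hypothetical cue words hinting that an inquiry needs a literature search.
EVIDENCE_CUES = ("evidence", "trial", "compare", "efficacy", "literature", "versus")


@dataclass
class RoutingDecision:
    inquiry_type: str   # "general" or "evidence_based"
    sources: list[str]  # data sources to gather from


def route_inquiry(inquiry: str) -> RoutingDecision:
    """Stand-in for the LLM call: classify the inquiry and pick sources."""
    text = inquiry.lower()
    if any(cue in text for cue in EVIDENCE_CUES):
        return RoutingDecision("evidence_based", GENERAL_SOURCES + EVIDENCE_SOURCES)
    return RoutingDecision("general", list(GENERAL_SOURCES))
```

A real classifier would need far more context than keywords provide, which is why the article stresses reasoning ability and an understanding of the inquiry's context.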

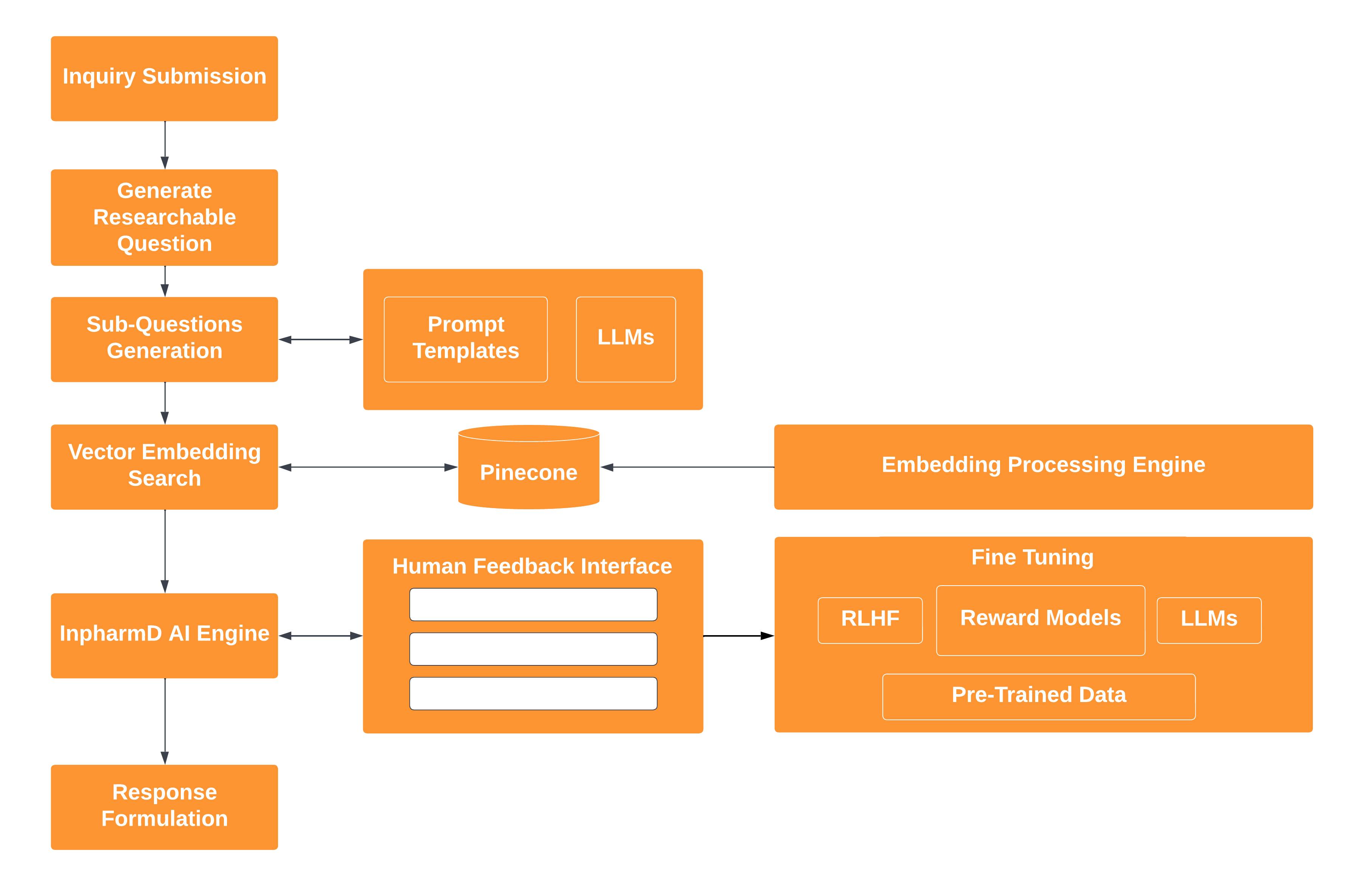

Process the Inquiry

The inquiry process combines advanced AI capabilities with sophisticated language modeling techniques. In the initial phase, the AI engine thoroughly analyzes each inquiry received from the user.

Following this, drug information pharmacists formulate a researchable question based on the inquiry, which then moves to the sub-question generation phase. In this step, the InpharmD AI engine uses prompt templates and advanced LLMs to systematically generate sub-questions aligned with the researchable question. These sub-questions then proceed to the next step: vector embedding search.
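The sub-question step can be sketched as filling a prompt template and parsing the model's reply. The template wording, `build_prompt`, and `parse_sub_questions` are hypothetical, since the real templates are not published. The LLM call itself is left out, so the sketch stays independent of any particular model API.

```python
import re
from string import Template

# Hypothetical prompt template; the real templates are not published.
SUB_QUESTION_PROMPT = Template(
    "You are assisting a drug information pharmacist.\n"
    "Researchable question: $question\n"
    "List $count focused sub-questions, one per line, that together answer it."
)

# Matches a leading bullet ("-", "*") or a list number ("1.", "2)").
LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")


def build_prompt(question: str, count: int = 3) -> str:
    """Fill the template with the pharmacist's researchable question."""
    return SUB_QUESTION_PROMPT.substitute(question=question.strip(), count=count)


def parse_sub_questions(llm_output: str) -> list[str]:
    """Turn line-per-question model output into a clean list."""
    cleaned = (LIST_MARKER.sub("", line).strip() for line in llm_output.splitlines())
    return [line for line in cleaned if line]
```

Each parsed sub-question would then be embedded and searched separately, so one broad inquiry can pull in several narrower sets of documents.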

During this phase, the search query runs against Pinecone, a vector database holding approximately 2 billion vector embeddings extracted from InpharmD’s collection of 30 million literature documents. The generated query is then sent to the InpharmD AI engine. InpharmD AI selected Pinecone for its seamless scalability, fast query results, and low latency, which make it an essential part of InpharmD’s data-driven operations.
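The two figures quoted here imply roughly 67 embeddings per document, which suggests that documents are split into passages before embedding. The article does not describe how. The sketch below shows one common approach, fixed-size word windows with overlap; the function name, window size, and overlap are assumptions.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping word windows, one embedding per window."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


# Back-of-the-envelope check using the figures quoted in the article.
vectors_per_document = 2_000_000_000 / 30_000_000
print(round(vectors_per_document, 1))  # 66.7
```

The overlap keeps a sentence that straddles a window boundary retrievable from either neighboring chunk.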

This step is pivotal because it lets the AI engine break down and interpret the linguistic and contextual details of the inquiry.

A crucial part of the process is the human feedback interface, which acts as an intermediary between the AI engine and the fine-tuning phase. In the fine-tuning phase, pre-trained data, LLMs, and Reinforcement Learning from Human Feedback (RLHF) with reward models are used for refinement. The process culminates in the AI engine's formulation of a nuanced response.
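The article does not describe how its reward models are trained. In RLHF generally, a reward model is commonly fit to pairwise human preferences: for two candidate responses, the loss is small when the response the reviewer preferred receives the higher reward. A minimal sketch of that standard loss, with a hypothetical function name:

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood that the chosen response outranks the rejected one."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); this form avoids overflow.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

When both responses get the same reward the loss is log 2, and it shrinks toward zero as the preferred response pulls ahead.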

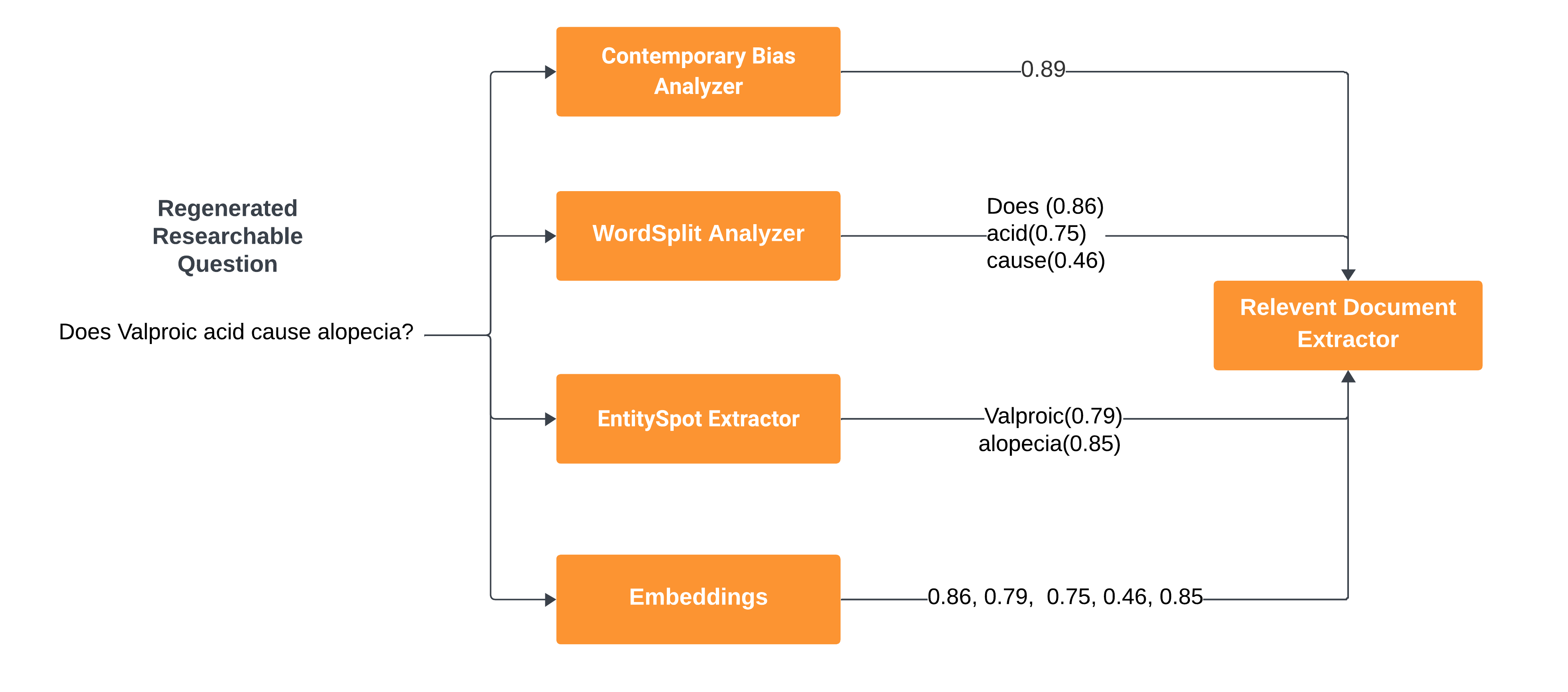

Embedding Semantic Search

InpharmD employs vector embedding semantic search to retrieve pertinent documents related to an inquiry. The inquiry is fed into InpharmD’s embedding processing engine, which runs on company servers with graphics processing unit (GPU) acceleration. This approach enhances performance, security, and cost-efficiency. The resulting embedding is stored in Pinecone, InpharmD’s vector database, segmented per user.

Vector databases are essential for LLMs. They help find the right information in huge sets of data quickly and accurately. They do this by turning text into numeric codes called vectors, which allow InpharmD to compare and search through many documents rapidly. This finds the most pertinent information much faster than older search methods. Moreover, these vectors capture the meaning of the text, which improves search and supports more sophisticated language-related tasks.
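To make the idea of comparing vectors concrete, here is a minimal brute-force sketch using cosine similarity, a common measure for embeddings. The toy three-dimensional vectors and document ids are made up for illustration, and the article does not state which similarity metric InpharmD uses.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], corpus: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k document ids whose vectors are most similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions or more.
corpus = {
    "anticoagulant_trial": [0.9, 0.1, 0.0],
    "antibiotic_guideline": [0.0, 0.2, 0.9],
    "anticoagulant_review": [0.8, 0.3, 0.1],
}
print([doc_id for doc_id, _ in top_k([1.0, 0.2, 0.0], corpus)])
```

This version scans every vector, which is fine for three documents. A vector database avoids the full scan by using approximate nearest-neighbor indexes, which is what makes search over billions of embeddings practical.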

When using the AI search tool alongside RLHF, InpharmD embeds the clinician's refined question and queries the Pinecone vector database for inquiries that share semantic similarities. This method holds significant potential because it allows InpharmD to identify documents that align conceptually with the original inquiry. Additionally, InpharmD leverages prior drug-information pharmacist (researcher) insights, such as rewards, when feasible, to refine and focus the semantic search.
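The article does not say how pharmacist rewards enter the search. One simple possibility is to blend each hit's similarity score with a stored reward, as sketched below. The `rerank` function, the `weight` parameter, and the 0-to-1 reward scale are assumptions made for illustration.

```python
def rerank(
    hits: list[tuple[str, float]],
    rewards: dict[str, float],
    weight: float = 0.3,
) -> list[tuple[str, float]]:
    """Blend similarity with prior pharmacist reward; unseen documents get a neutral 0.5."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    blended = [
        (doc_id, (1 - weight) * similarity + weight * rewards.get(doc_id, 0.5))
        for doc_id, similarity in hits
    ]
    return sorted(blended, key=lambda item: item[1], reverse=True)


hits = [("doc_a", 0.90), ("doc_b", 0.88), ("doc_c", 0.60)]
rewards = {"doc_a": 0.1, "doc_b": 0.9}  # doc_c has no feedback yet
print([doc_id for doc_id, _ in rerank(hits, rewards)])  # ['doc_b', 'doc_a', 'doc_c']
```

Here `doc_b` overtakes `doc_a` even though its raw similarity is slightly lower, because reviewers rated it more useful in the past.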

Process the Resolution

The resolution process consists of three key components: an AI engine, summary generation, and pharmacist review.

- AI Engine: It begins by analyzing the clinician’s inquiry using language modeling techniques to comprehend and process the request.

- Summary Generation: The AI engine produces a condensed version of the information it intends to offer to the drug-information pharmacist. This summary acts as a brief overview of the content.

- Researcher's Review: The drug-information pharmacist examines the summary provided by the AI engine. If the summary aligns with the inquiry, the researcher evaluates it and prepares a response. If the summary doesn't match the inquiry, the researcher disregards it.

Aggregate the Response

The algorithm aggregates different parts of clinical literature to deliver an evidence-based, customized response. Components of the response include: customized text, condensed clinical guidelines, expert opinions, randomized controlled trials, case reports, comprehensive tertiary analyses, and citations.

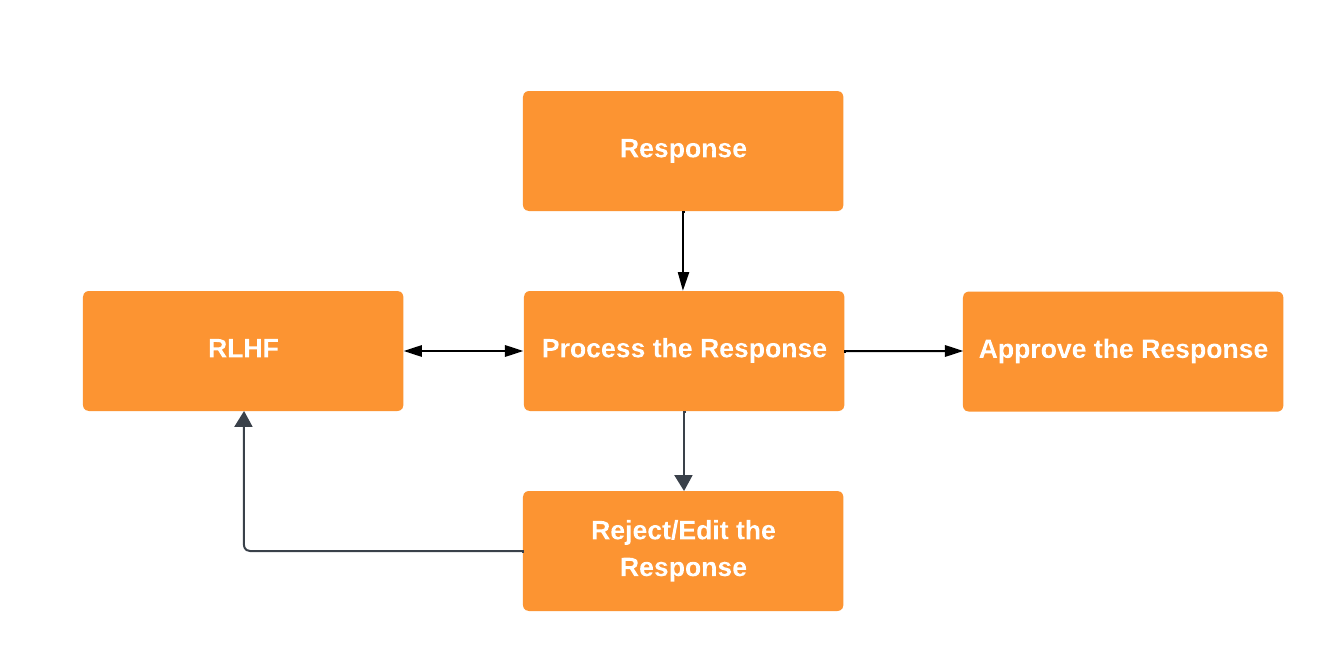
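The response components listed above can be pictured as a small data structure. The class and field names below are hypothetical; they only mirror the component types the article names.

```python
from dataclasses import dataclass, field


@dataclass
class EvidenceItem:
    kind: str      # e.g. "guideline", "expert_opinion", "rct", "case_report", "tertiary"
    summary: str
    citation: str


@dataclass
class AggregatedResponse:
    customized_text: str
    evidence: list[EvidenceItem] = field(default_factory=list)

    def citations(self) -> list[str]:
        """Unique citations in first-seen order."""
        return list(dict.fromkeys(item.citation for item in self.evidence))

    def by_kind(self, kind: str) -> list[EvidenceItem]:
        """All evidence items of one type, in their original order."""
        return [item for item in self.evidence if item.kind == kind]
```

Keeping the citation on each evidence item means the final citation list can always be rebuilt from the evidence that was actually used.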

The reviewer combines the AI-generated response with a method called RLHF. This method involves using special templates and techniques to gather and verify responses through human review. This process ensures responses are accurate and relevant, aiming to avoid errors.

If the response fits the inquiry, the researcher approves it. If there are mismatches, the researcher can decline it or request modifications.
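The three review outcomes can be sketched as a small decision type. The names of the follow-up actions are hypothetical, since the article does not say what happens to a draft after each decision.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    DECLINE = "decline"
    REQUEST_MODIFICATIONS = "request_modifications"


def next_action(decision: Decision) -> str:
    """Map the researcher's decision to a (hypothetical) follow-up action."""
    if decision is Decision.APPROVE:
        return "deliver_to_clinician"
    if decision is Decision.REQUEST_MODIFICATIONS:
        return "return_for_revision"
    return "discard_draft"
```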

Final Response Delivery

After gathering information, researchers condense the collected documents and extract a meaningful answer using the AI's summarization parsing technology. This tailored response, derived from carefully selected data, is then provided to address the clinician's inquiry.

This methodical process, powered by AI, allows InpharmD to deliver precise responses based on refined and data-driven insights when healthcare providers ask questions.
